Introduction

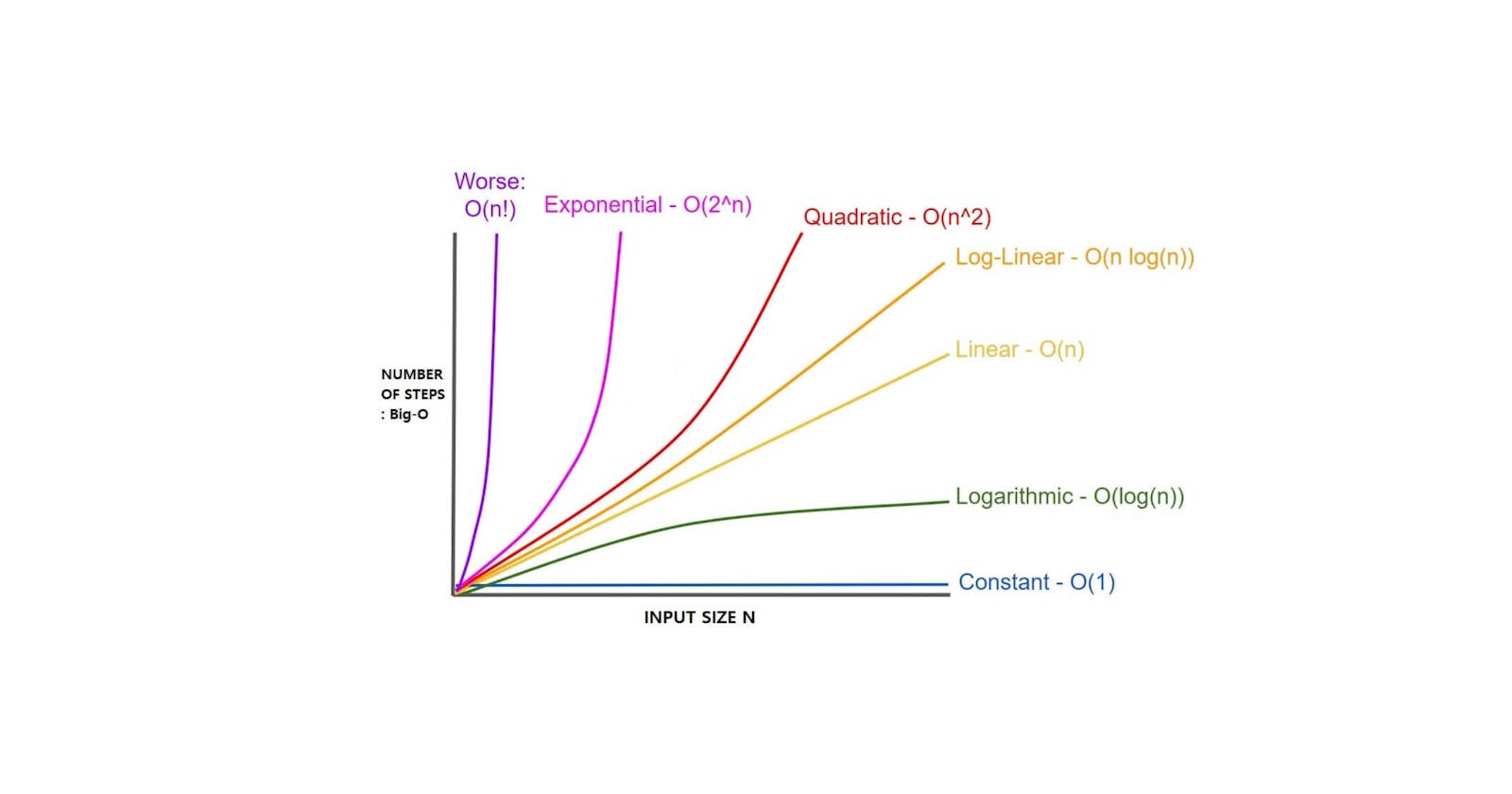

When analyzing algorithms, the time complexity is a commonly used metric to compare and evaluate the efficiency of different approaches. Time complexity describes the amount of time an algorithm takes to complete its task, based on the size of the input. A lower time complexity generally means that the algorithm is faster and more efficient, especially as the input size increases.

However, it's important to note that lower time complexity doesn't always equate to better performance in practice. Several factors can impact the actual performance of an algorithm, including the complexity of individual operations, the efficiency of the underlying hardware, and the implementation details of the algorithm.

Let's get to the code

Today, we will compare two functions countLetters1 and countLetters2 that count the number of occurrences of each letter in an input list. The code for the two functions is provided below.

Function1: countLetters1

function countLetters1(input_list) {

let res = {};

const letters = 'ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789';

for (let i = 0; i < letters.length; i++) {

let count = 0;

for (let j = 0; j < input_list.length; j++) {

if (input_list[j] === letters[i]) {

count++;

}

}

res[letters[i]] = count;

}

return res;

}

Function1: Time complexity

The time complexity of countLetters1 is O(62 * n) which is O(n) in terms of Big O notation. where n is the number of elements in the input list. This is because the function loops through each character in the set ['A'..'Z', 'a'..'z', '0'..'9'], 26 + 26 + 10 which has a total of 62 characters. For each character, it then loops through the input list and counts the number of occurrences of the character.

Function2: countLetters2

function countLetters2(input_list) {

let res = {};

mergeSort(input_list);

let curCount = 0;

let curChar = '';

for (let i = 0; i < input_list.length; i++) {

if (i === 0 || input_list[i] === input_list[i - 1]) {

curCount++;

} else {

curCount = 1;

}

curChar = input_list[i];

res[curChar] = curCount;

}

return res;

}

Function2: Time complexity

The time complexity of countLetters2 is O(n * log(n)), where n is the size of the input list. This is because the merge_sort function has a time complexity of O(n * log(n)), and the second loop runs n times, for a total of n * log(n) operations.

To compare the two functions, let's consider an input list of size n = 1 million.

For this input size, countLetters1 will require approximately 62 * 1 million = 62 million operations, while countLetters2 will require approximately 1 million * log2(1 million) + 1 million ≈ 21 million operations. This indicates that countLetters2 will be faster than countLetters1 for this input size.

Conclusion

In conclusion, From the above example, we can conclude that countLetters2 will execute faster than countLetters1 on the same hardware configuration and under identical situations. However, in the real world, readability often takes precedence in production code, and the performance is patched through the hardware.

I hope this article was helpful to you. Consider leaving a thumbs up 👍 if you liked it.